Kubernetes

- 1: Architecture

- 2: Deployments

- 3: Installing Kubernetes

- 4: Kind

- 5: kubectl

- 6: Minikube

- 7: Pod

- 8: Secrets

- 9: Troubleshooting

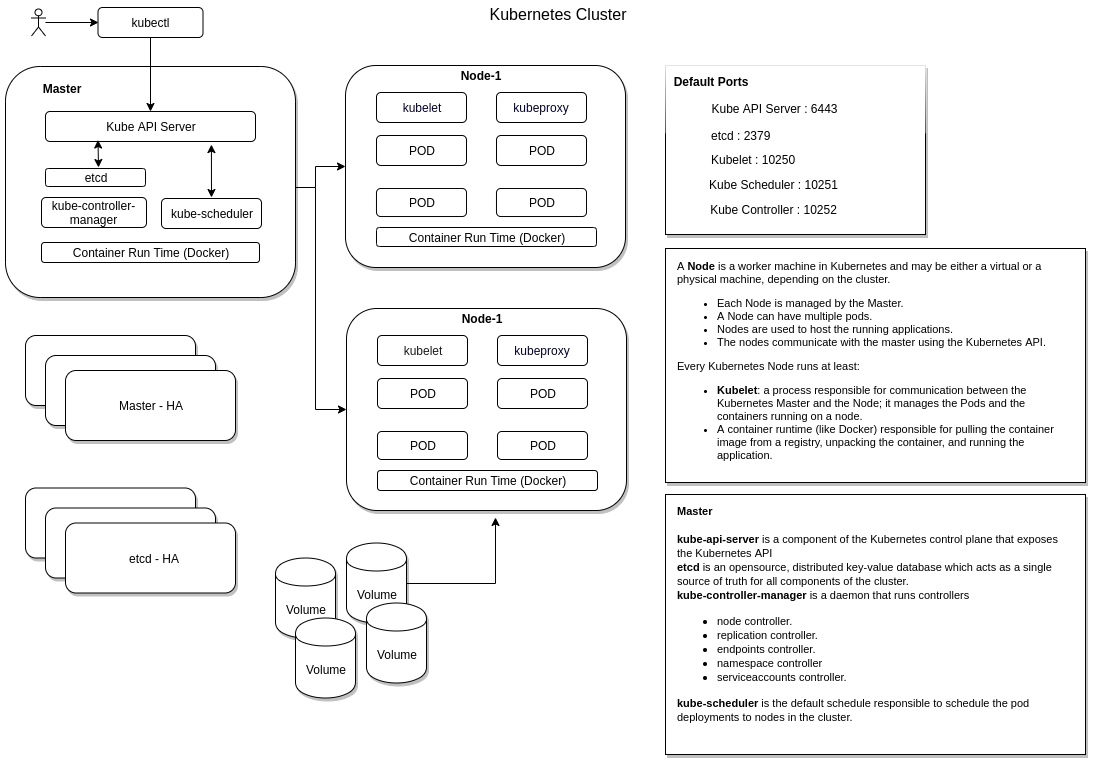

1 - Architecture

Kubernetes cluster components

Master

- The Master is responsible for:

- Managing the cluster.

- Scheduling the deployments.

- Exposing the kubernetes API.

- The Kubernetes master automatically schedules pods across the Nodes in the cluster.

- The Master’s automatic scheduling takes into account the available resources on each Node.
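The idea of resource-aware scheduling can be illustrated with a toy sketch. This is not how kube-scheduler is implemented; it only shows the "filter nodes that fit, then pick the best one" idea. The node names, the memory figures, and the pick_node helper are all made up for illustration.

```shell
# Toy illustration only: pick the node with the most free memory that can
# still fit the pod's request. Node names and numbers are made up.

# Usage: pick_node REQUEST_MB "name:free_mb" ...
pick_node() {
  local request_mb=$1; shift
  local best_name="" best_free=-1 entry name free
  for entry in "$@"; do
    name=${entry%%:*}
    free=${entry##*:}
    # Filter step: skip nodes that cannot fit the request.
    if [ "$free" -lt "$request_mb" ]; then
      continue
    fi
    # Score step: prefer the node with the most free memory.
    if [ "$free" -gt "$best_free" ]; then
      best_name=$name
      best_free=$free
    fi
  done
  echo "$best_name"
}

chosen=$(pick_node 512 "node-a:256" "node-b:2048" "node-c:1024")
echo "pod scheduled on: $chosen"
```

An empty result means no node can fit the request, which roughly corresponds to a pod staying in the Pending state.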

Node

- A Node is a worker machine in Kubernetes and may be either a virtual or a physical machine, depending on the cluster.

- Each Node is managed by the Master.

- A Node can have multiple pods.

- Nodes are used to host the running applications.

- The nodes communicate with the master using the Kubernetes API.

Every Kubernetes Node runs at least:

- Kubelet: a process responsible for communication between the Kubernetes Master and the Node; it manages the Pods and the containers running on the machine.

- A container runtime (like Docker): responsible for pulling the container image from a registry, unpacking the container, and running the application.

kube api-server

todo

Kube scheduler

todo

Controller manager

The Kubernetes controller manager is a daemon that embeds the core control loops shipped with Kubernetes.

A controller is a control loop that watches the shared state of the cluster through the apiserver and makes changes that attempt to move the current state towards the desired state.
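A control loop of this kind can be sketched in a few lines of shell. This is a toy model, not Kubernetes code: the desired and current variables stand in for the cluster state that a real controller would read from and write to the apiserver, and reconcile_once is a hypothetical helper.

```shell
# Toy reconcile loop: compare desired and current replica counts and move
# the current state one step toward the desired state on each pass.
desired=3
current=0

reconcile_once() {
  if [ "$current" -lt "$desired" ]; then
    current=$((current + 1))      # stand-in for "create one instance"
  elif [ "$current" -gt "$desired" ]; then
    current=$((current - 1))      # stand-in for "delete one instance"
  fi
}

passes=0
while [ "$current" -ne "$desired" ]; do
  reconcile_once
  passes=$((passes + 1))
  echo "pass $passes: current=$current desired=$desired"
done
```

A real controller never exits; it keeps watching, so that any later drift between current and desired state is corrected as well.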

Examples of controllers that ship with Kubernetes:

- node controller

- replication controller

- endpoints controller

- namespace controller

- serviceaccounts controller

etcd

etcd is an open-source, distributed key-value database that acts as the single source of truth for all components of the cluster.

DaemonSet

A DaemonSet ensures that one copy (instance) of a pod runs on every node in the cluster.

Use cases:

- kube-proxy

- Log Viewer

- Monitoring Agent

- Networking Solution (Weave-net)
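As a rough sketch, a DaemonSet manifest looks much like a Deployment manifest without a replica count. The snippet below only writes such a manifest to a temporary directory, so it runs without a cluster. The node-agent name, the labels, and the busybox stand-in for a real agent are placeholders chosen for illustration.

```shell
# Write a minimal DaemonSet manifest to a temporary directory.
# The name, labels, and busybox "agent" are placeholders for illustration.
workdir=$(mktemp -d)
cat > "$workdir/node-agent-daemonset.yaml" <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent
  labels:
    app: node-agent
spec:
  selector:
    matchLabels:
      app: node-agent
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      containers:
      - name: agent
        image: busybox:1.36
        # Stand-in for a real monitoring or logging agent.
        command: ["sh", "-c", "while true; do date; sleep 60; done"]
EOF

echo "manifest written to $workdir/node-agent-daemonset.yaml"
# With a running cluster it could then be applied with:
#   kubectl apply -f "$workdir/node-agent-daemonset.yaml"
#   kubectl get daemonset node-agent
```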

2 - Deployments

Deployments in Kubernetes

The Deployment instructs Kubernetes how to create and update instances of your application.

The Kubernetes master schedules those application instances onto individual Nodes in the cluster.

Once the application instances are created, a Kubernetes Deployment Controller continuously monitors those instances.

Deployment

$ kubectl create deployment hello-node --image=gcr.io/hello-minikube-zero-install/hello-node

deployment.apps/hello-node created

$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 1/1 1 1 19s

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-node-55b49fb9f8-bkmnb 1/1 Running 0 43s

$ kubectl get events

LAST SEEN TYPE REASON OBJECT MESSAGE

94s Normal Scheduled pod/hello-node-55b49fb9f8-bkmnb Successfully assigned default/hello-node-55b49fb9f8-bkmnb to minikube

92s Normal Pulling pod/hello-node-55b49fb9f8-bkmnb Pulling image "gcr.io/hello-minikube-zero-install/hello-node"

91s Normal Pulled pod/hello-node-55b49fb9f8-bkmnb Successfully pulled image "gcr.io/hello-minikube-zero-install/hello-node"

90s Normal Created pod/hello-node-55b49fb9f8-bkmnb Created container hello-node

90s Normal Started pod/hello-node-55b49fb9f8-bkmnb Started container hello-node

94s Normal SuccessfulCreate replicaset/hello-node-55b49fb9f8 Created pod: hello-node-55b49fb9f8-bkmnb

94s Normal ScalingReplicaSet deployment/hello-node Scaled up replica set hello-node-55b49fb9f8 to 1

4m34s Normal NodeHasSufficientMemory node/minikube Node minikube status is now: NodeHasSufficientMemory

4m34s Normal NodeHasNoDiskPressure node/minikube Node minikube status is now: NodeHasNoDiskPressure

4m34s Normal NodeHasSufficientPID node/minikube Node minikube status is now: NodeHasSufficientPID

4m11s Normal RegisteredNode node/minikube Node minikube event: Registered Node minikube in Controller

4m6s Normal Starting node/minikube Starting kube-proxy.

$ kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /root/.minikube/ca.crt
    server: https://172.17.0.27:8443
  name: minikube
contexts:
- context:
    cluster: minikube
    user: minikube
  name: minikube
current-context: minikube
kind: Config
preferences: {}
users:
- name: minikube
  user:
    client-certificate: /root/.minikube/client.crt
    client-key: /root/.minikube/client.key

Nexus Deployment

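kubectl run nexus --image=sonatype/nexus3:3.2.1 --port 8081

Note: with the kubectl v1.15 used here, kubectl run creates a Deployment. From kubectl v1.18 onward it creates a bare Pod, so kubectl create deployment is needed to get a Deployment that kubectl expose deployment can target.

Expose the service:

kubectl expose deployment nexus --type=NodePort

Access the service:

minikube service nexus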

nginx deployment

# nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

$ kubectl apply -f nginx-deployment.yaml

deployment.apps/nginx-deployment created

$ kubectl get events

$ kubectl get pods

$ kubectl delete deployment nginx-deployment

deployment.extensions "nginx-deployment" deleted

Cleanup

$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 1/1 1 1 4m16s

$ kubectl delete deployment hello-node

deployment.extensions "hello-node" deleted

$ kubectl get deployments

No resources found.

$ kubectl get pods

No resources found.

3 - Installing Kubernetes

[This page is under construction …]

I am going to cover the installation of Kubernetes in two ways:

- Install kubernetes with kubeadm

- Install kubernetes the hard way

Prerequisites to install kubernetes with kubeadm

- VirtualBox

- CentOS image

- Virtual machine with at least 2 CPUs

Install kubelet, kubectl and kubeadm

Installing-kubeadm-kubelet-and-kubectl

# This script is a modified version of the one in the Kubernetes documentation

RELEASE="$(curl -sSL https://dl.k8s.io/release/stable.txt)"

mkdir -p /usr/bin

cd /usr/bin

curl -L --remote-name-all https://storage.googleapis.com/kubernetes-release/release/${RELEASE}/bin/linux/amd64/{kubeadm,kubelet,kubectl}

chmod +x {kubeadm,kubelet,kubectl}

curl -sSL "https://raw.githubusercontent.com/kubernetes/kubernetes/${RELEASE}/build/debs/kubelet.service" > /etc/systemd/system/kubelet.service

mkdir -p /etc/systemd/system/kubelet.service.d

curl -sSL "https://raw.githubusercontent.com/kubernetes/kubernetes/${RELEASE}/build/debs/10-kubeadm.conf" > /etc/systemd/system/kubelet.service.d/10-kubeadm.conf

At this stage, the kubelet service fails to start because kubeadm init has not run yet, so /var/lib/kubelet/config.yaml does not exist.

kubeadm init

[root@10 ~]# swapoff -a

[root@10 ~]# kubeadm init

[init] Using Kubernetes version: v1.15.3

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [10.0.2.15 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.2.15]

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [10.0.2.15 localhost] and IPs [10.0.2.15 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [10.0.2.15 localhost] and IPs [10.0.2.15 127.0.0.1 ::1]

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 20.014010 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node 10.0.2.15 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node 10.0.2.15 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: n2e7ii.lp571oh88qidwzdj

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.2.15:6443 --token n2e7ii.lp571oh88qidwzdj \

--discovery-token-ca-cert-hash sha256:0957baa4cdea8fda244c159cf2a038a2afe2c0b20fb922014472c5c7918dac81

kubelet service

[root@10 ~]# service kubelet status

Redirecting to /bin/systemctl status kubelet.service

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since zo 2019-09-01 21:04:42 CEST; 1min 9s ago

Docs: http://kubernetes.io/docs/

Main PID: 4682 (kubelet)

CGroup: /system.slice/kubelet.service

└─4682 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --c...

sep 01 21:05:35 10.0.2.15 kubelet[4682]: E0901 21:05:35.780354 4682 summary_sys_containers.go:47] Failed to get system container stats for ...

sep 01 21:05:35 10.0.2.15 kubelet[4682]: E0901 21:05:35.780463 4682 summary_sys_containers.go:47] Failed to get system container stats for ...

sep 01 21:05:37 10.0.2.15 kubelet[4682]: W0901 21:05:37.277274 4682 cni.go:213] Unable to update cni config: No networks found in /...ni/net.d

sep 01 21:05:40 10.0.2.15 kubelet[4682]: E0901 21:05:40.725221 4682 kubelet.go:2169] Container runtime network not ready: NetworkRe...tialized

sep 01 21:05:42 10.0.2.15 kubelet[4682]: W0901 21:05:42.277894 4682 cni.go:213] Unable to update cni config: No networks found in /...ni/net.d

sep 01 21:05:45 10.0.2.15 kubelet[4682]: E0901 21:05:45.728937 4682 kubelet.go:2169] Container runtime network not ready: NetworkRe...tialized

sep 01 21:05:45 10.0.2.15 kubelet[4682]: E0901 21:05:45.813742 4682 summary_sys_containers.go:47] Failed to get system container stats for ...

sep 01 21:05:45 10.0.2.15 kubelet[4682]: E0901 21:05:45.813868 4682 summary_sys_containers.go:47] Failed to get system container stats for ...

sep 01 21:05:47 10.0.2.15 kubelet[4682]: W0901 21:05:47.278690 4682 cni.go:213] Unable to update cni config: No networks found in /...ni/net.d

sep 01 21:05:50 10.0.2.15 kubelet[4682]: E0901 21:05:50.733668 4682 kubelet.go:2169] Container runtime network not ready: NetworkRe...tialized

Hint: Some lines were ellipsized, use -l to show in full.

# Run the following as a regular user to start using the cluster

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@10 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.0.2.15 NotReady master 6m38s v1.15.3

Deploy Weave Network for networking

Weave Net uses a pod CIDR of 10.32.0.0/12 by default.

[root@10 ~]# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.extensions/weave-net created

# kubernetes master is now in Ready status after deploying weave-net

[root@10 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.0.2.15 Ready master 4d v1.15.3

Verification

[root@10 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5644d7b6d9-9gp2m 0/1 Running 0 23m

coredns-5644d7b6d9-x5f9f 0/1 Running 0 23m

etcd-10.0.2.15 1/1 Running 0 22m

kube-apiserver-10.0.2.15 1/1 Running 0 22m

kube-controller-manager-10.0.2.15 1/1 Running 0 22m

kube-proxy-xw6mq 1/1 Running 0 23m

kube-scheduler-10.0.2.15 1/1 Running 0 22m

weave-net-8hmqc 2/2 Running 0 2m6s

[root@10 ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-5644d7b6d9-9gp2m 0/1 Running 0 23m

kube-system coredns-5644d7b6d9-x5f9f 0/1 Running 0 23m

kube-system etcd-10.0.2.15 1/1 Running 0 22m

kube-system kube-apiserver-10.0.2.15 1/1 Running 0 22m

kube-system kube-controller-manager-10.0.2.15 1/1 Running 0 22m

kube-system kube-proxy-xw6mq 1/1 Running 0 23m

kube-system kube-scheduler-10.0.2.15 1/1 Running 0 22m

kube-system weave-net-8hmqc 2/2 Running 0 2m9s

[root@10 ~]# kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

[root@10 ~]# kubectl get events

LAST SEEN TYPE REASON OBJECT MESSAGE

4d Normal NodeHasSufficientMemory node/10.0.2.15 Node 10.0.2.15 status is now: NodeHasSufficientMemory

4d Normal NodeHasNoDiskPressure node/10.0.2.15 Node 10.0.2.15 status is now: NodeHasNoDiskPressure

4d Normal NodeHasSufficientPID node/10.0.2.15 Node 10.0.2.15 status is now: NodeHasSufficientPID

4d Normal RegisteredNode node/10.0.2.15 Node 10.0.2.15 event: Registered Node 10.0.2.15 in Controller

4d Normal Starting node/10.0.2.15 Starting kube-proxy.

38m Normal Starting node/10.0.2.15 Starting kubelet.

38m Normal NodeHasSufficientMemory node/10.0.2.15 Node 10.0.2.15 status is now: NodeHasSufficientMemory

38m Normal NodeHasNoDiskPressure node/10.0.2.15 Node 10.0.2.15 status is now: NodeHasNoDiskPressure

38m Normal NodeHasSufficientPID node/10.0.2.15 Node 10.0.2.15 status is now: NodeHasSufficientPID

38m Normal NodeAllocatableEnforced node/10.0.2.15 Updated Node Allocatable limit across pods

38m Normal Starting node/10.0.2.15 Starting kube-proxy.

38m Normal RegisteredNode node/10.0.2.15 Node 10.0.2.15 event: Registered Node 10.0.2.15 in Controller

12m Normal NodeReady node/10.0.2.15 Node 10.0.2.15 status is now: NodeReady

/etc/kubernetes

[root@10 kubernetes]# tree /etc/kubernetes

/etc/kubernetes

├── admin.conf

├── controller-manager.conf

├── kubelet.conf

├── manifests

│ ├── etcd.yaml

│ ├── kube-apiserver.yaml

│ ├── kube-controller-manager.yaml

│ └── kube-scheduler.yaml

├── pki

│ ├── apiserver.crt

│ ├── apiserver-etcd-client.crt

│ ├── apiserver-etcd-client.key

│ ├── apiserver.key

│ ├── apiserver-kubelet-client.crt

│ ├── apiserver-kubelet-client.key

│ ├── ca.crt

│ ├── ca.key

│ ├── etcd

│ │ ├── ca.crt

│ │ ├── ca.key

│ │ ├── healthcheck-client.crt

│ │ ├── healthcheck-client.key

│ │ ├── peer.crt

│ │ ├── peer.key

│ │ ├── server.crt

│ │ └── server.key

│ ├── front-proxy-ca.crt

│ ├── front-proxy-ca.key

│ ├── front-proxy-client.crt

│ ├── front-proxy-client.key

│ ├── sa.key

│ └── sa.pub

└── scheduler.conf

3 directories, 30 files

Docker images for kubernetes cluster

[root@10 kubernetes]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.15.3 232b5c793146 13 days ago 82.4 MB

k8s.gcr.io/kube-apiserver v1.15.3 5eb2d3fc7a44 13 days ago 207 MB

k8s.gcr.io/kube-scheduler v1.15.3 703f9c69a5d5 13 days ago 81.1 MB

k8s.gcr.io/kube-controller-manager v1.15.3 e77c31de5547 13 days ago 159 MB

k8s.gcr.io/coredns 1.3.1 eb516548c180 7 months ago 40.3 MB

k8s.gcr.io/etcd 3.3.10 2c4adeb21b4f 9 months ago 258 MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 20 months ago 742 kB

kubeadm reset

[root@10 ~]# kubeadm reset

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

[reset] Removing info for node "10.0.2.15" from the ConfigMap "kubeadm-config" in the "kube-system" Namespace

W0901 11:56:16.802756 29062 removeetcdmember.go:61] [reset] failed to remove etcd member: error syncing endpoints with etc: etcdclient: no available endpoints

.Please manually remove this etcd member using etcdctl

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/etcd /var/lib/kubelet /etc/cni/net.d /var/lib/dockershim /var/run/kubernetes]

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually.

For example:

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

[root@10 ~]#

Start k8s cluster after system reboot

# swapoff -a

# systemctl start kubelet

# systemctl status kubelet

kubeadm join token

# This command will create a new token and display the connection string

[root@10 ~]# kubeadm token create --print-join-command

kubeadm join 10.0.2.15:6443 --token 1clhim.pk9teustr2v1gnu2 --discovery-token-ca-cert-hash sha256:efe18b97c7a320e7173238af7126e33ebe76f3877255c8f9aa055f292dbf3755

# Other token commands

kubeadm token list

kubeadm token delete <TOKEN>
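The --discovery-token-ca-cert-hash value in the join command is the SHA-256 hash of the cluster CA's public key. The sketch below recomputes it with openssl, following the pipeline described in the kubeadm documentation. So that it runs without a cluster, it generates a throwaway self-signed certificate when CA_CERT is not set; the tmpdir and CA_CERT variable names are assumptions of this sketch. On a real control-plane node, CA_CERT would point at /etc/kubernetes/pki/ca.crt.

```shell
# Throwaway CA certificate so the sketch runs without a cluster.
# On a control-plane node, set CA_CERT=/etc/kubernetes/pki/ca.crt instead.
tmpdir=$(mktemp -d)
CA_CERT=${CA_CERT:-$tmpdir/ca.crt}
if [ ! -f "$CA_CERT" ]; then
  openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
    -subj "/CN=kubernetes" \
    -keyout "$tmpdir/ca.key" -out "$CA_CERT" 2>/dev/null
fi

# SHA-256 over the DER-encoded public key of the CA certificate.
ca_hash=$(openssl x509 -pubkey -noout -in "$CA_CERT" \
  | openssl rsa -pubin -outform der 2>/dev/null \
  | openssl dgst -sha256 -hex \
  | sed 's/^.* //')

echo "--discovery-token-ca-cert-hash sha256:$ca_hash"
```

This is useful when a token is still valid but the original join command, including the hash, is no longer at hand.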

Troubleshooting

kubelet service not starting

# vi /var/log/messages

Sep 1 20:57:25 localhost kubelet: F0901 20:57:25.706063 2874 server.go:198] failed to load Kubelet config file /var/lib/kubelet/config.yaml, error failed to read kubelet config file "/var/lib/kubelet/config.yaml", error: open /var/lib/kubelet/config.yaml: no such file or directory

Sep 1 20:57:25 localhost systemd: kubelet.service: main process exited, code=exited, status=255/n/a

Sep 1 20:57:25 localhost systemd: Unit kubelet.service entered failed state.

Sep 1 20:57:25 localhost systemd: kubelet.service failed.

Sep 1 20:57:35 localhost systemd: kubelet.service holdoff time over, scheduling restart.

Sep 1 20:57:35 localhost systemd: Stopped kubelet: The Kubernetes Node Agent.

Sep 1 20:57:35 localhost systemd: Started kubelet: The Kubernetes Node Agent.

Sep 1 20:57:35 localhost kubelet: F0901 20:57:35.968939 2882 server.go:198] failed to load Kubelet config file /var/lib/kubelet/config.yaml, error failed to read kubelet config file "/var/lib/kubelet/config.yaml", error: open /var/lib/kubelet/config.yaml: no such file or directory

Sep 1 20:57:35 localhost systemd: kubelet.service: main process exited, code=exited, status=255/n/a

Sep 1 20:57:35 localhost systemd: Unit kubelet.service entered failed state.

Sep 1 20:57:35 localhost systemd: kubelet.service failed.

Check journal for kubelet messages

journalctl -xeu kubelet

4 - Kind

kind: Kubernetes in Docker

# Set go path, kind path and KUBECONFIG path

export PATH=$PATH:$HOME/go/bin:$HOME/k8s/bin

kind get kubeconfig-path

# output: /home/sriram/.kube/kind-config-kind

export KUBECONFIG="$(kind get kubeconfig-path)"

sriram@sriram-Inspiron-5567:~$ kind create cluster

Creating cluster "kind" ...

✓ Ensuring node image (kindest/node:v1.15.0) 🖼

✓ Preparing nodes 📦

✓ Creating kubeadm config 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

Cluster creation complete. You can now use the cluster with:

export KUBECONFIG="$(kind get kubeconfig-path --name="kind")"

kubectl cluster-info

kind create cluster

kubectl cluster-info

Kubernetes master is running at https://localhost:37933

KubeDNS is running at https://localhost:37933/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

# To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

kind delete cluster

Deleting cluster "kind" ...

$KUBECONFIG is still set to use /home/sriram/.kube/kind-config-kind even though that file has been deleted, remember to unset it

5 - kubectl

kubectl is the command-line interface that uses the Kubernetes API to interact with the cluster.

kubectl version

Once kubectl is configured, we can see the versions of both the client and the server. The client version is the kubectl version; the server version is the Kubernetes version installed on the master. The output also includes details about the build.

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.2", GitCommit:"f6278300bebbb750328ac16ee6dd3aa7d3549568", GitTreeState:"clean", BuildDate:"2019-08-05T09:23:26Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.0", GitCommit:"e8462b5b5dc2584fdcd18e6bcfe9f1e4d970a529", GitTreeState:"clean", BuildDate:"2019-06-19T16:32:14Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

cluster-info

$ kubectl cluster-info

Kubernetes master is running at https://172.17.0.45:8443

KubeDNS is running at https://172.17.0.45:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

get nodes

To view the nodes in the cluster, run the kubectl get nodes command.

This command shows all nodes that can be used to host our applications.

Right now there is only one node, and its status is Ready (it is ready to accept applications for deployment).

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

minikube Ready master 11m v1.15.0

# list nodes with more information

$ kubectl get nodes -o=wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

minikube Ready master 13m v1.15.0 10.0.2.15 <none> Buildroot 2018.05.3 4.15.0 docker://18.9.6

Creating a namespace to isolate pods in the cluster

kubectl create namespace dev

ConfigMaps

kubectl create configmap \
<config-name> --from-literal=<key>=<value>

(or)

kubectl create configmap \
<config-name> --from-file=<path_to_file>

Example:

kubectl create configmap \
app-color-config --from-literal=APP_COLOR=blue \
--from-literal=APP_MOD=prod

kubectl create configmap \
app-config --from-file=app-config.properties

View ConfigMaps:

kubectl get configmaps
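The two forms behave differently. With --from-file, the whole file becomes the value of a single key named after the file, while each --from-literal adds one key. The sketch below creates a sample properties file (its contents are made up) and derives the matching --from-literal flags from it. It only prints the kubectl commands instead of running them, so it works without a cluster; the workdir and literal_flags names are assumptions of this sketch.

```shell
# Create a sample properties file (contents are made up for illustration).
workdir=$(mktemp -d)
cat > "$workdir/app-config.properties" <<'EOF'
APP_COLOR=blue
APP_MOD=prod
EOF

# Build one --from-literal flag per KEY=VALUE line of the file.
literal_flags=""
while IFS='=' read -r key value; do
  [ -n "$key" ] || continue
  literal_flags="$literal_flags --from-literal=$key=$value"
done < "$workdir/app-config.properties"

# Print the commands rather than running them.
echo "kubectl create configmap app-color-config$literal_flags"
echo "kubectl create configmap app-config --from-file=$workdir/app-config.properties"
```

kubectl also has a --from-env-file flag that reads KEY=VALUE lines from a file and creates one key per line, which is usually the closer match for a properties file.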

6 - Minikube

minikube is a tool that runs a single-node Kubernetes cluster inside a virtual machine (VirtualBox in this setup). It helps in learning k8s with a local setup.

starting minikube for the first time

sriram@sriram-Inspiron-5567:~/k8s$ minikube start

😄 minikube v1.2.0 on linux (amd64)

💿 Downloading Minikube ISO ...

129.33 MB / 129.33 MB [============================================] 100.00% 0s

🔥 Creating virtualbox VM (CPUs=2, Memory=2048MB, Disk=20000MB) ...

🐳 Configuring environment for Kubernetes v1.15.0 on Docker 18.09.6

💾 Downloading kubeadm v1.15.0

💾 Downloading kubelet v1.15.0

🚜 Pulling images ...

🚀 Launching Kubernetes ...

⌛ Verifying: apiserver proxy etcd scheduler controller dns

🏄 Done! kubectl is now configured to use "minikube"

status

$ minikube status

host: Running

kubelet: Running

apiserver: Running

kubectl: Correctly Configured: pointing to minikube-vm at 192.168.99.100

minikube service list

$ minikube service list

|-------------|------------|--------------|

| NAMESPACE | NAME | URL |

|-------------|------------|--------------|

| default | kubernetes | No node port |

| kube-system | kube-dns | No node port |

|-------------|------------|--------------|

minikube stop

$ minikube stop

✋ Stopping "minikube" in virtualbox ...

🛑 "minikube" stopped.

$ minikube status

host: Stopped

kubelet:

apiserver:

kubectl:

restarting minikube

sriram@sriram-Inspiron-5567:~/k8s$ minikube start

😄 minikube v1.2.0 on linux (amd64)

💡 Tip: Use 'minikube start -p <name>' to create a new cluster, or 'minikube delete' to delete this one.

🔄 Restarting existing virtualbox VM for "minikube" ...

⌛ Waiting for SSH access ...

🐳 Configuring environment for Kubernetes v1.15.0 on Docker 18.09.6

🔄 Relaunching Kubernetes v1.15.0 using kubeadm ...

⌛ Verifying: apiserver proxy etcd scheduler controller dns

🏄 Done! kubectl is now configured to use "minikube"

Enabling the metrics server and listing all apiservices

$ minikube addons enable metrics-server

✅ metrics-server was successfully enabled

sriram@sriram-Inspiron-5567:~/k8s$ kubectl get apiservices

NAME SERVICE AVAILABLE AGE

v1. Local True 31m

v1.apps Local True 31m

v1.authentication.k8s.io Local True 31m

v1.authorization.k8s.io Local True 31m

v1.autoscaling Local True 31m

v1.batch Local True 31m

v1.coordination.k8s.io Local True 31m

v1.networking.k8s.io Local True 31m

v1.rbac.authorization.k8s.io Local True 31m

v1.scheduling.k8s.io Local True 31m

v1.storage.k8s.io Local True 31m

v1beta1.admissionregistration.k8s.io Local True 31m

v1beta1.apiextensions.k8s.io Local True 31m

v1beta1.apps Local True 31m

v1beta1.authentication.k8s.io Local True 31m

v1beta1.authorization.k8s.io Local True 31m

v1beta1.batch Local True 31m

v1beta1.certificates.k8s.io Local True 31m

v1beta1.coordination.k8s.io Local True 31m

v1beta1.events.k8s.io Local True 31m

v1beta1.extensions Local True 31m

v1beta1.metrics.k8s.io kube-system/metrics-server True 95s

v1beta1.networking.k8s.io Local True 31m

v1beta1.node.k8s.io Local True 31m

v1beta1.policy Local True 31m

v1beta1.rbac.authorization.k8s.io Local True 31m

v1beta1.scheduling.k8s.io Local True 31m

v1beta1.storage.k8s.io Local True 31m

v1beta2.apps Local True 31m

v2beta1.autoscaling Local True 31m

v2beta2.autoscaling Local True 31m

Minikube behind Proxy

export HTTP_PROXY=http://<proxy hostname:port>

export HTTPS_PROXY=https://<proxy hostname:port>

export NO_PROXY=localhost,127.0.0.1,10.96.0.0/12,192.168.99.0/24,192.168.39.0/24

minikube start

7 - Pod

Kubernetes Pods

$ kubectl describe pods

Name: kubernetes-bootcamp-5b48cfdcbd-5ddlw

Namespace: default

Priority: 0

Node: minikube/172.17.0.90

Start Time: Mon, 26 Aug 2019 11:54:05 +0000

Labels: pod-template-hash=5b48cfdcbd

run=kubernetes-bootcamp

Annotations: <none>

Status: Running

IP: 172.18.0.5

Controlled By: ReplicaSet/kubernetes-bootcamp-5b48cfdcbd

Containers:

kubernetes-bootcamp:

Container ID: docker://016f25827984c14dc74e5cbaafe43b0fb77b20b8838b5818d1e9a90376b8ad5d

Image: gcr.io/google-samples/kubernetes-bootcamp:v1

Image ID: docker-pullable://jocatalin/kubernetes-bootcamp@sha256:0d6b8ee63bb57c5f5b6156f446b3bc3b3c143d233037f3a2f00e279c8fcc64af

Port: 8080/TCP

Host Port: 0/TCP

State: Running

Started: Mon, 26 Aug 2019 11:54:06 +0000

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-5wbkl (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-5wbkl:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-5wbkl

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 6m58s default-scheduler Successfully assigned default/kubernetes-bootcamp-5b48cfdcbd-5ddlw to minikube

Normal Pulled 6m57s kubelet, minikube Container image "gcr.io/google-samples/kubernetes-bootcamp:v1" already present on machine

Normal Created 6m57s kubelet, minikube Created container kubernetes-bootcamp

Normal Started 6m57s kubelet, minikube Started container kubernetes-bootcamp

POD Manifest/Definition

apiVersion: v1
kind: Pod
metadata:
  name: label-demo
  labels:
    environment: production
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.7.9
    ports:
    - containerPort: 80

$ kubectl apply -f nginx-pod.yaml

$ kubectl get events

$ kubectl get pods

$ kubectl delete pod label-demo

Static Pod

Pods that the kubelet creates on its own, without any instruction from the kube-apiserver, are called static pods.

If the master node fails, the kubelet on a worker node can still create and delete such pods. This is achieved by placing the pod definition files directly in the manifests path on the node (/etc/kubernetes/manifests). The kubelet checks this path regularly, creates a Pod for each definition it finds, and keeps that Pod alive.
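A minimal sketch of creating a static pod: write a Pod manifest into the directory the kubelet watches. To keep the snippet safe to run anywhere, MANIFEST_DIR defaults to a temporary directory; on a real node it would be /etc/kubernetes/manifests and would need root. The static-nginx name and the MANIFEST_DIR variable are assumptions of this sketch.

```shell
# Sketch: drop a Pod manifest into the kubelet's static-pod directory.
# MANIFEST_DIR defaults to a temporary directory so this is safe to run;
# on a real node it would be /etc/kubernetes/manifests (requires root).
MANIFEST_DIR=${MANIFEST_DIR:-$(mktemp -d)}

cat > "$MANIFEST_DIR/static-nginx.yaml" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: static-nginx
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.7.9
    ports:
    - containerPort: 80
EOF

echo "wrote $MANIFEST_DIR/static-nginx.yaml"
```

Removing the file from the manifests directory is what deletes a static pod; deleting the pod through kubectl does not remove it permanently, because the kubelet recreates it from the file.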

POD Eviction

If a node runs out of CPU, memory or disk, Kubernetes may decide to evict one or more pods. It may choose to evict the Weave Net pod, which will disrupt pod network operations.

You can see when pods have been evicted by running kubectl get events or kubectl get pods.

8 - Secrets

Managing Kubernetes secrets

Secret Manifest with default secret type:

apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
data:
  username: User
  password: **********

$ kubectl apply -f ./secret.yaml

$ kubectl get secrets

NAME TYPE DATA AGE

default-token-prh24 kubernetes.io/service-account-token 3 27m

mysecret Opaque 2 14m

type: Opaque means that, from Kubernetes's point of view, the contents of this Secret are unstructured; it can contain arbitrary key-value pairs.
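Values under data: in a Secret manifest must be base64-encoded, so a plain value such as User has to be encoded before it goes into the file. A minimal sketch, using the placeholder username from the manifest above; the variable names are assumptions of this sketch.

```shell
# Encode a placeholder value for the data: section of a Secret manifest.
# printf is used instead of echo so that no trailing newline gets encoded.
username_b64=$(printf '%s' 'User' | base64)
echo "username: $username_b64"

# Decoding reverses it: base64 is an encoding, not encryption.
decoded=$(printf '%s' "$username_b64" | base64 --decode)
echo "decoded: $decoded"
```

To supply plain-text values instead, the Secret API also accepts a stringData: field, which Kubernetes encodes on write.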

SecretType = "Opaque" // Opaque (arbitrary data; default)

SecretType = "kubernetes.io/service-account-token" // Kubernetes auth token

SecretType = "kubernetes.io/dockercfg" // Docker registry auth

SecretType = "kubernetes.io/dockerconfigjson" // Latest Docker registry auth

9 - Troubleshooting

Troubleshooting

kubectl get - list resources

kubectl describe - show detailed information about a resource

kubectl logs - print the logs from a container in a pod

kubectl exec - execute a command on a container in a pod

$ export POD_NAME=$(kubectl get pods -o go-template --template '{{range .items}}{{.metadata.name}}{{"\n"}}{{end}}')

$ echo $POD_NAME

kubernetes-bootcamp-5b48cfdcbd-5ddlw

$ kubectl exec $POD_NAME env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=kubernetes-bootcamp-5b48cfdcbd-5ddlw

KUBERNETES_SERVICE_HOST=10.96.0.1

KUBERNETES_SERVICE_PORT=443

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_PORT=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1

NPM_CONFIG_LOGLEVEL=info

NODE_VERSION=6.3.1

HOME=/root

# Start a bash session in the Pod’s container:

## name of the container can be omitted if we only have a single container in the Pod

kubectl exec -ti $POD_NAME bash